Theory

Linear scaling method

The linear scaling method we use is based on expanding the quantities of interest in terms of Chebyshev polynomials. These polynomials are routinely employed in chemistry and physics and, for this kind of expansion, exhibit greater accuracy than other sets of orthogonal polynomials. In what follows, we give a brief introduction to the topic. For more details, see this reference.

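The expansion of a function $f(x)$, with $x \in [-1, 1]$, in terms of Chebyshev polynomials of the first kind reads

$$ f(x) = \sum_{n=0}^{\infty} \alpha_n T_n(x), \qquad \alpha_n = \frac{\langle f | T_n \rangle_1}{\langle T_n | T_n \rangle_1}, \qquad \langle f | g \rangle_1 = \int_{-1}^{1} \frac{f(x)\, g(x)}{\pi \sqrt{1 - x^2}}\, dx, $$

where $T_n(x) = \cos(n \arccos x)$ is the n-th Chebyshev polynomial of the first kind and $\alpha_n$ is its corresponding moment. The Chebyshev polynomials satisfy the following convenient recursion relation

$$ T_0(x) = 1, \qquad T_1(x) = x, \qquad T_{n+1}(x) = 2x\, T_n(x) - T_{n-1}(x). $$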

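Although the expansion above is correct and efficient, evaluating its moments can be problematic because of the square root in the denominator of the weight function. A considerable simplification can be introduced by working with the modified orthogonal functions and the corresponding dot product:

$$ \phi_n(x) = \frac{T_n(x)}{\pi \sqrt{1 - x^2}}, \qquad \langle f | g \rangle_2 = \int_{-1}^{1} \pi \sqrt{1 - x^2}\, f(x)\, g(x)\, dx. $$

Rewriting the expansion in this modified framework yields

$$ f(x) = \frac{1}{\pi \sqrt{1 - x^2}} \left[ \mu_0 + 2 \sum_{n=1}^{\infty} \mu_n T_n(x) \right], \qquad \mu_n = \int_{-1}^{1} f(x)\, T_n(x)\, dx, $$

which, as we will see later, allows for an efficient recursive calculation of the moments.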
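As a minimal illustration of the recursion and of the modified expansion, the following Python sketch computes the moments of a test function and rebuilds the function from them. The function names, the choice of a semicircle as test function, and the midpoint quadrature in the angle variable are assumptions made for this example and are not taken from the HQS Qolossal code.

```python
import numpy as np

def chebyshev_T(n_max, x):
    """Return T_0(x) ... T_{n_max-1}(x), built with the three-term recursion."""
    x = np.asarray(x, dtype=float)
    T = np.empty((n_max,) + x.shape)
    T[0] = 1.0
    if n_max > 1:
        T[1] = x
    for n in range(2, n_max):
        T[n] = 2.0 * x * T[n - 1] - T[n - 2]
    return T

def modified_moments(f, n_max, n_points=512):
    """mu_n = int_{-1}^{1} f(x) T_n(x) dx, midpoint rule in theta with x = cos(theta)."""
    theta = np.pi * (np.arange(n_points) + 0.5) / n_points
    x = np.cos(theta)
    weights = (np.pi / n_points) * np.sin(theta)  # dx = sin(theta) dtheta
    return chebyshev_T(n_max, x) @ (weights * f(x))

def reconstruct(mu, x):
    """Evaluate [mu_0 + 2 sum_{n>=1} mu_n T_n(x)] / (pi sqrt(1 - x^2))."""
    x = np.asarray(x, dtype=float)
    T = chebyshev_T(len(mu), x)
    series = mu[0] * T[0] + 2.0 * np.tensordot(mu[1:], T[1:], axes=1)
    return series / (np.pi * np.sqrt(1.0 - x**2))

def semicircle(x):
    """Test function (assumption): a normalized semicircle on [-1, 1]."""
    return (2.0 / np.pi) * np.sqrt(1.0 - x**2)

mu = modified_moments(semicircle, n_max=6)
x_test = np.linspace(-0.9, 0.9, 7)
max_error = np.max(np.abs(reconstruct(mu, x_test) - semicircle(x_test)))
```

For this particular test function only two moments are non-zero, so a handful of terms already reproduces it. A generic function needs more moments and, in practice, a damping kernel to suppress truncation oscillations.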

For our purposes, the quantities we aim to represent in the Chebyshev basis are functions of the Hamiltonian rather than of a simple independent variable. Before expressing these functions as a Chebyshev expansion, we must ensure that the spectrum of the Hamiltonian lies within the interval $[-1, 1]$, as the expansion is only defined there. If needed, the Hamiltonian should be rescaled by

$$ \tilde{H} = \frac{2}{\Delta} \left( H - E_c \right), $$

where $E_c$ is the center of the original spectrum and $\Delta$ is its full width. For simplicity, in what follows we omit the tilde on the Hamiltonian; however, we always refer to the rescaled Hamiltonian. The results obtained must be scaled back appropriately to fit the original spectrum, and hence the original Hamiltonian [1]. The quantities of interest, which are functions of the (rescaled) Hamiltonian, can be expressed as a Chebyshev series by observing

$$ f(H)\, |k\rangle = f(E_k)\, |k\rangle, $$

where the set $\{ |k\rangle \}$ consists of the single-particle eigenfunctions of the Hamiltonian with eigenvalues $E_k$. As an example, let us explicitly write the expansion of the density of states, i.e.

$$ \rho(E) = \mathrm{Tr}\, \delta(E - H). $$

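The operator to be traced is, as we just pointed out, a function of the Hamiltonian. By inserting the resolution of the identity, $\sum_k |k\rangle \langle k| = 1$, and using the manipulations shown above, we obtain

$$ \rho(E) = \sum_k \langle k | \delta(E - H) | k \rangle = \sum_k \delta(E - E_k). $$

The expansion of the density of states in terms of Chebyshev moments is then

$$ \rho(E) = \frac{1}{\pi \sqrt{1 - E^2}} \left[ \mu_0 + 2 \sum_{n=1}^{\infty} \mu_n T_n(E) \right], \qquad \mu_n = \int_{-1}^{1} \rho(E)\, T_n(E)\, dE = \sum_k \langle k | T_n(H) | k \rangle. $$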

This expression, though exact, is not particularly convenient, as it requires knowledge of all single-particle eigenfunctions $|k\rangle$. This would defeat the purpose of any further calculation, since these eigenstates and their corresponding eigenvalues already determine every property of the system [2]. Nonetheless, the form presented above is used in our code to evaluate specific matrix elements of functions of the Hamiltonian, e.g. Green’s function matrix elements in the site basis, for which only a single evaluation is necessary. Fortunately, the trace can be approximated efficiently and accurately through a stochastic evaluation [3, 4]. In this approach, the trace of a general single-particle operator $A$ is approximated as

$$ \frac{1}{N} \mathrm{Tr}\, A \approx \frac{1}{R} \sum_{r=1}^{R} \langle r | A | r \rangle, $$

where the set of $|r\rangle$ consists of $R$ random states constructed from independent and identically distributed random coefficients with zero mean, and $N$ is the system size. The vectors are normalized to 1, which ensures that they can indeed be considered states for the purpose of our calculations. This means, however, that when evaluating quantities such as the density of states, the average over the random states is normalized to 1 rather than to the number of particles. In terms of accuracy, it has been shown [5] that the error of the approximation is proportional to $1/\sqrt{NR}$. This works in our favor, as the accuracy increases with the system size: for a system with a billion sites, averaging over only a few states is sufficient for most purposes [6], since in this case $1/\sqrt{NR}$ is of order $10^{-5}$.
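The stochastic evaluation of the Chebyshev moments can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the spectral bounds are estimated with Gershgorin discs, the random states use random signs as one possible choice of zero-mean coefficients, dense matrices stand in for the sparse ones a real calculation would use, and all function names are hypothetical rather than part of HQS Qolossal.

```python
import numpy as np

def gershgorin_bounds(H):
    """Cheap bounds on the spectrum of a Hermitian matrix (no diagonalization)."""
    centers = np.real(np.diag(H))
    radii = np.sum(np.abs(H), axis=1) - np.abs(np.diag(H))
    return np.min(centers - radii), np.max(centers + radii)

def rescale(H, safety=1.01):
    """Shift and scale H so that its spectrum lies strictly inside [-1, 1]."""
    e_min, e_max = gershgorin_bounds(H)
    center = 0.5 * (e_max + e_min)
    half_width = 0.5 * safety * (e_max - e_min)
    return (H - center * np.eye(H.shape[0])) / half_width, center, half_width

def stochastic_moments(H_scaled, n_moments, n_random, rng):
    """Estimate mu_n = Tr[T_n(H)] / N for n < n_moments (needs n_moments >= 2)."""
    N = H_scaled.shape[0]
    mu = np.zeros(n_moments)
    for _ in range(n_random):
        # Random signs: i.i.d., zero mean, normalized so that <r|r> = 1.
        r = rng.choice([-1.0, 1.0], size=N) / np.sqrt(N)
        t_prev, t_curr = r, H_scaled @ r
        mu[0] += r @ t_prev
        mu[1] += r @ t_curr
        for n in range(2, n_moments):
            # Chebyshev recursion applied to vectors: only matrix-vector products.
            t_prev, t_curr = t_curr, 2.0 * (H_scaled @ t_curr) - t_prev
            mu[n] += r @ t_curr
    return mu / n_random

# Toy model (assumption): periodic tight-binding chain with hopping -1.
N = 200
H = -(np.eye(N, k=1) + np.eye(N, k=-1))
H[0, -1] = H[-1, 0] = -1.0

H_scaled, center, half_width = rescale(H)
mu = stochastic_moments(H_scaled, n_moments=16, n_random=50,
                        rng=np.random.default_rng(0))

# Reference from exact diagonalization, affordable only for small systems.
energies = np.linalg.eigvalsh(H_scaled)
mu_exact = np.array([np.mean(np.cos(n * np.arccos(energies))) for n in range(16)])
max_deviation = np.max(np.abs(mu - mu_exact))
```

Because the random states are normalized to 1, the zeroth moment equals 1 and the resulting density of states integrates to 1, as noted above. A density expressed in the original energy units additionally carries the factor `1 / half_width` from the rescaling.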

Kubo formula for the conductivity

For the analysis of linear response, we use a method based on the Kubo formula. In this context, we focus on the case of responses to electrical signals. According to the Kubo formula, the response of an operator to a zero-frequency (DC) perturbation is given by

where is the volume of the system, is the intrinsic lifetime, is the equilibrium density, is the DC perturbation, and is the current operator. If the general operator , we have

where the volume has been ‘absorbed’ into the current, and we are considering the spatially averaged quantity. According to Ohm’s law, the quantity in brackets in the equation above is the DC conductivity $\sigma$. This formula simplifies considerably in the non-interacting case. Following the steps detailed in [7], we can rewrite the above equation as

where the Fermi occupation function and the denominator of the fraction come from the density matrix. Additionally, the following property has been used

where $|k\rangle$ are the single-particle eigenfunctions of the Hamiltonian with energies $E_k$. Rewriting this equation in terms of Green’s functions, one obtains

where $G^{\pm}(E) = (E - H \pm i0^{+})^{-1}$ are the retarded and advanced Green’s functions, respectively. This formula is referred to as the Kubo-Bastin formula and is equivalent to the Kubo formula for non-interacting systems.

If we focus on the conductivity, i.e. $J = q\, v$, where $q$ is the carrier charge and $v$ is the velocity operator, then with some simple manipulations, we arrive at

where we make use of $\delta(E - H) = -\frac{1}{2\pi i} \left[ G^{+}(E) - G^{-}(E) \right]$. Integrating the last equation by parts leads to

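where the derivative of the Fermi function acts as a window, selecting the energies around the chemical potential that contribute to the dissipative conductivity, as expected. The zero-temperature limit of the above equation is the so-called Kubo-Greenwood formula

$$ \sigma(\mu) = \frac{\pi \hbar q^2}{\Omega}\, \mathrm{Tr} \left[ v\, \delta(\mu - H)\, v\, \delta(\mu - H) \right], $$

where $\Omega$ is the volume of the system, $\mu$ is the chemical potential, and we make use of the identity $-\partial f(E) / \partial E \to \delta(E - \mu)$ for $T \to 0$. For simplicity, the direction of the velocity operators has been omitted; to calculate any element of the conductivity tensor, one simply uses the velocity operators in the corresponding directions, i.e.

$$ \sigma_{\alpha\beta}(\mu) = \frac{\pi \hbar q^2}{\Omega}\, \mathrm{Tr} \left[ v_\alpha\, \delta(\mu - H)\, v_\beta\, \delta(\mu - H) \right]. $$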

This formula can then be expanded in terms of Chebyshev polynomials by transforming the delta functions back into Green’s functions and evaluating the corresponding moments for the stochastic samples, as shown in [7]. The only difference is the normalization of the random states and, consequently, the volume prefactor [8].
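To make the idea of "moments for the stochastic samples" concrete, the sketch below evaluates the double Chebyshev moments $\mu_{mn} \approx \langle r | v\, T_m(H)\, v\, T_n(H) | r \rangle$ averaged over random states, which are the building blocks of a Chebyshev-expanded conductivity. It is a hedged illustration only: the function names, the toy chain, and the random-sign states are assumptions, and the energy-dependent prefactors and damping kernels of the full scheme described in [7] are omitted.

```python
import numpy as np

def chebyshev_vectors(H, x, n_moments):
    """Return the stack T_0(H)x, ..., T_{M-1}(H)x (needs n_moments >= 2)."""
    out = np.empty((n_moments, x.shape[0]), dtype=complex)
    out[0] = x
    out[1] = H @ x
    for n in range(2, n_moments):
        out[n] = 2.0 * (H @ out[n - 1]) - out[n - 2]
    return out

def conductivity_moments(H, v, n_moments, vectors):
    """Average of <r| v T_m(H) v T_n(H) |r> over the given normalized vectors.

    Assumes that H (already rescaled) and v are Hermitian.
    """
    mu = np.zeros((n_moments, n_moments), dtype=complex)
    for r in vectors:
        left = chebyshev_vectors(H, v @ r, n_moments)    # rows: T_m(H) v |r>
        right = chebyshev_vectors(H, r, n_moments) @ v.T  # rows: v T_n(H) |r>
        mu += left.conj() @ right.T
    return mu / len(vectors)

# Toy model (assumption): periodic chain, hopping chosen so the spectrum is in (-1, 1).
N, t = 12, 1.0 / 2.2
hop = np.roll(np.eye(N), 1, axis=1)      # hop[j, (j + 1) % N] = 1
H = -t * (hop + hop.T)
v = -1j * t * (hop - hop.T)              # bond-based velocity, hbar = a = 1

rng = np.random.default_rng(1)
random_states = rng.choice([-1.0, 1.0], size=(20, N)) / np.sqrt(N)
mu_mn = conductivity_moments(H, v, n_moments=6, vectors=random_states)
```

Only matrix-vector products appear in the recursion, which is what keeps the cost linear in the system size for sparse Hamiltonians. Passing a complete orthonormal basis instead of random states reproduces the exact trace divided by the system size.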

Velocity operator

As shown in the previous sections, to compute the conductivity of the

system, we need the velocity operator. In this section, we demonstrate how this

is handled when the system is initialized via the Lattice Validator input.

The velocity operator can be computed using Heisenberg’s equation of motion,

, where is the

position operator. While this is, in principle, a simple operation

requiring two matrix-matrix multiplications and an addition, it becomes

ill-defined for generic boundary conditions other than hard-wall. In

fact, the position operator cannot be defined for periodic systems, and

the above-mentioned formula fails. This problem, however, can be circumvented by

using the 'distance' information provided by the various bonds that make

up the Hamiltonian. Specifically, the translation vector associated with each bond will

give us information about the real-space displacement between the two unit cells it

connects, i.e. all the spatial information we need to compute the commutator .

The periodic boundary conditions are then automatically handled

when implementing the recipe given by the translation associated with

each bond to construct the full operator, much like the construction of

the Hamiltonian. Note that it is not important where spatial origin is

set, as it would simply introduce a contribution proportional to

the identity in the position operator, which does not contribute to the

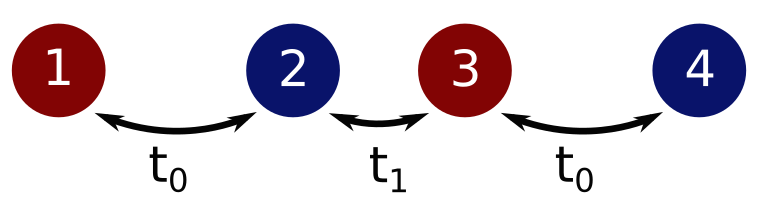

value of the commutator. As an example, let us consider the case of an

unevenly connected chain, as shown in the following figure:

This system has the following Hamiltonian (on the lattice)

Considering a unit cell made of two different sites, this Hamiltonian can equivalently be represented by the following bond information

where the vector $\mathbf{n}$ represents the translation, in units of unit cells, associated with the bond (hence $\mathbf{n} = (0)$ is the bond within the unit cell), and the $h_{\mathbf{n}}$ are matrices spanning the sites of the unit cell, indicating which sites are connected and with what strength. Since the Hamiltonian is the sum of the contributions from the two bonds, we can sum the velocity contributions associated with each bond. In this system only the x-component of the velocity operator is non-zero. For the trivial translation associated with the bond $\mathbf{n} = (0)$, the commutator $[h_{(0)}, X_0]$ can be evaluated directly, where $h_{(0)}$ is the first bond Hamiltonian from the previous equation and the matrix $X_0$ is simply the position operator within the unit cell. For the second bond, a similar approach can be used, but care must be taken with the position operator. Let us now consider the subspace made of the original unit cell and the cell reached by the translation $\mathbf{n} = (1)$, i.e. the displacement associated with the bond.

The position operator in the x-direction, in the site basis within this subspace [9], reads:

$$ X = \begin{pmatrix} X_0 & \mathbf{0} \\ \mathbf{0} & X_0 + a_x I \end{pmatrix}, \qquad X_0 = \mathrm{diag}(x_1, x_2), $$

where $\mathbf{0}$ is the null matrix, $I$ is the identity, $x_i$ is the position in the x-direction of site $i$, and $a_x$ is the displacement in the x-direction corresponding to the lattice vector. The matrices $X_0$ and $X_0 + a_x I$ are the position operators in the original unit cell and the displaced one, respectively. Note that, in general, the displacement vector is

$$ \mathbf{d} = \sum_i n_i\, \mathbf{a}_i, $$

where $n_i$ is the translation associated with the bond for the i-th lattice vector $\mathbf{a}_i$. Our example is the trivial case of a translation by a single lattice vector of magnitude one. In the same subspace, the Hamiltonian contribution of the (directional) bond is

$$ H^{(1)} = \begin{pmatrix} \mathbf{0} & h_{(1)} \\ \mathbf{0} & \mathbf{0} \end{pmatrix}. $$

The position of the non-zero block is determined by the directionality of the bond definition: if the translation were $\mathbf{n} = (-1)$, i.e. hopping to the left, the non-zero block would be the lower left one. In terms of the block representation, it is straightforward to evaluate the commutator

$$ [H^{(1)}, X] = \begin{pmatrix} \mathbf{0} & [h_{(1)}, X_0] + a_x\, h_{(1)} \\ \mathbf{0} & \mathbf{0} \end{pmatrix}, $$

where the superscript denotes that the contribution to the corresponding quantities comes from the bond $\mathbf{n} = (1)$. Since the block structure of the resulting contribution to the velocity operator is the same as that of the bond, one can construct the velocity associated with each bond as $v_{\mathbf{n}} = \frac{i}{\hbar} \left( [h_{\mathbf{n}}, X_0] + d_x\, h_{\mathbf{n}} \right)$, with $d_x$ the x-component of the displacement $\mathbf{d}$, and distribute these across the whole system in the same way that the Hamiltonian is constructed. The storage strategy is the same as well: one simply keeps track of the intra-cell and inter-cell velocity contributions and the associated translations. The boundary conditions therefore do not represent a problem, because the correct behavior of the velocity operator is imposed by the distribution functions, i.e. by how the sub-blocks are repeated in the matrix corresponding to the whole system.
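The bond-based construction can be sketched as follows. The example assumes a dimerized chain with two sites per unit cell and hoppings `t1` (inside the cell) and `t2` (between cells); these values, the site positions, and the function names are illustrative assumptions and do not reproduce the Lattice Validator interface.

```python
import numpy as np

def distribute(blocks, n_cells):
    """Place per-bond blocks {translation: matrix} on a periodic 1D chain of cells."""
    n_orb = next(iter(blocks.values())).shape[0]
    op = np.zeros((n_cells * n_orb, n_cells * n_orb), dtype=complex)
    for shift, block in blocks.items():
        for cell in range(n_cells):
            target = (cell + shift) % n_cells  # periodic wrap-around
            rows = slice(cell * n_orb, (cell + 1) * n_orb)
            cols = slice(target * n_orb, (target + 1) * n_orb)
            op[rows, cols] += block
    return op

def bond_velocity(block, shift, X0, a_x, hbar=1.0):
    """v_n = (i / hbar) * ([h_n, X0] + n * a_x * h_n) for a single bond."""
    return (1j / hbar) * (block @ X0 - X0 @ block + shift * a_x * block)

# Hypothetical dimerized chain: sites A and B per cell, lattice constant a_x.
t1, t2, a_x = 1.0, 0.5, 1.0
X0 = np.diag([0.0, 0.4])                   # site positions inside the unit cell
h0 = np.array([[0.0, t1], [t1, 0.0]])      # translation (0): bond inside the cell
h1 = np.array([[0.0, 0.0], [t2, 0.0]])     # translation (+1): B linked to A of next cell
bonds = {0: h0, 1: h1, -1: h1.conj().T}    # (-1) is the Hermitian partner of (+1)

n_cells = 4
H = distribute(bonds, n_cells)
v = distribute({n: bond_velocity(h, n, X0, a_x) for n, h in bonds.items()}, n_cells)
```

Away from the wrap-around blocks, `v` coincides with the naive commutator `1j * (H @ X - X @ H)` built from an explicit position matrix `X`. On the blocks that connect the last cell to the first, the naive commutator picks up the spurious distance across the whole chain, whereas the bond-based operator uses the correct displacement of one lattice vector.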

Transport properties

HQS Qolossal can calculate the carrier mobility and the diffusion coefficient. Both quantities are derived from the DC conductivity and the density of states.

Carrier mobility

$$ \mu_c = \frac{\sigma}{e\, n}, $$

with $e$ the electron charge, $n$ the electron (hole) density, $\sigma$ the DC conductivity and, finally, $\mu_c$ the mobility.

Diffusion coefficient

$$ D = \frac{\sigma}{e^2 \rho}, $$

with $\sigma$ the DC conductivity, $\rho$ the density of states and $D$ the diffusion coefficient. All quantities refer to their values at the specified chemical potential $\mu$.
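As a small numerical illustration, the two derived quantities can be computed as below. The sketch assumes the textbook relations (mobility as conductivity divided by charge times carrier density, and the Einstein relation for the diffusion coefficient) in SI units; the function names and input values are hypothetical and the conventions used inside HQS Qolossal may differ.

```python
E_CHARGE = 1.602176634e-19  # elementary charge in coulomb (exact SI value)

def carrier_mobility(sigma, density):
    """Mobility sigma / (e n); sigma in S/m and n in 1/m^3 give m^2/(V s)."""
    return sigma / (E_CHARGE * density)

def diffusion_coefficient(sigma, dos):
    """D = sigma / (e^2 rho); rho in states/(J m^3) gives m^2/s."""
    return sigma / (E_CHARGE**2 * dos)

# Illustrative inputs (assumptions), all taken at the same chemical potential.
mobility = carrier_mobility(sigma=1.0e5, density=1.0e24)
diffusion = diffusion_coefficient(sigma=1.0e5, dos=1.0e46)
```

Both inputs must refer to the same chemical potential, and the density of states must be expressed per unit volume and energy for the units to work out.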

Optical properties

The optical electrical conductivity is computed via the Chebyshev expansion of the well-known Kubo-Bastin formula [10]

with the Fermi function, the velocity operator and the components of the Green’s function. This is expanded into Chebyshev polynomials in the same way as for the DC conductivity. All other optical properties are derived from the optical conductivity. Their definitions are given below.

Permittivity

Reflectivity

Absorption coefficient
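The way these quantities follow from the optical conductivity can be illustrated with the standard textbook relations. The sketch below assumes SI units, a three-dimensional conductivity in S/m, the $e^{-i\omega t}$ time convention, and normal incidence from vacuum; these are assumptions made for the example, and the exact definitions used by HQS Qolossal may differ in convention or prefactors.

```python
import numpy as np

EPS0 = 8.8541878128e-12   # vacuum permittivity in F/m
C_LIGHT = 299792458.0     # speed of light in m/s

def permittivity(sigma, omega):
    """Relative permittivity eps(omega) = 1 + i sigma(omega) / (eps0 omega)."""
    return 1.0 + 1j * np.asarray(sigma, dtype=complex) / (EPS0 * omega)

def reflectivity(eps):
    """Normal-incidence reflectivity R = |(n - 1) / (n + 1)|^2 with n = sqrt(eps)."""
    n = np.sqrt(eps)
    return np.abs((n - 1.0) / (n + 1.0)) ** 2

def absorption_coefficient(eps, omega):
    """alpha = 2 omega Im(n) / c, in 1/m, with n = sqrt(eps)."""
    return 2.0 * omega * np.sqrt(eps).imag / C_LIGHT

omega = 2.0 * np.pi * 5.0e14   # angular frequency in rad/s (visible range)
sigma = 1.0e4 + 2.0e3j         # illustrative complex conductivity in S/m
eps = permittivity(sigma, omega)
R = reflectivity(eps)
alpha = absorption_coefficient(eps, omega)
```

With a vanishing conductivity the permittivity reduces to 1, so both the reflectivity and the absorption coefficient vanish, which is a quick sanity check for the sign and unit conventions chosen here.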

Bibliography

1. This means that any prefactor coming from the rescaling of the Hamiltonian has to be included. For example, in the expansion of the density of states presented later in the text, rescaling the delta function contained in its definition yields a constant prefactor, which has to be included in the final rescaling to obtain the correct result.

2. Moreover, obtaining them requires the diagonalization of the whole Hamiltonian, a computational effort which scales like the cubic power (or the square, if the Hamiltonian is sparse) of the system size.

3. Drabold, David A., and Otto F. Sankey. 1993. “Maximum Entropy Approach for Linear Scaling in the Electronic Structure Problem.” Phys. Rev. Lett. 70 (June): 3631–34. https://doi.org/10.1103/PhysRevLett.70.3631.

4. Silver, R. N., and H. Röder. 1994. “Densities of States of Mega-Dimensional Hamiltonian Matrices.” International Journal of Modern Physics C 05 (04): 735–53. https://doi.org/10.1142/S0129183194000842.

5. Weiße, Alexander, Gerhard Wellein, Andreas Alvermann, and Holger Fehske. 2006. “The Kernel Polynomial Method.” Rev. Mod. Phys. 78 (March): 275–306. https://doi.org/10.1103/RevModPhys.78.275.

6. The appropriate number of random states to average over also depends on the order of the expansion: the higher the number of moments, the finer the energy resolution of the expansion, hence the larger the sampling pool must be.

7. Fan, Zheyong, José H. Garcia, Aron W. Cummings, Jose Eduardo Barrios-Vargas, Michel Panhans, Ari Harju, Frank Ortmann, and Stephan Roche. 2021. “Linear Scaling Quantum Transport Methodologies.” Physics Reports 903: 1–69. https://doi.org/10.1016/j.physrep.2020.12.001.

8. The corresponding volume will be the volume of the unit cell instead of the total volume.

9. Note that, in this example, the subspace mentioned is equivalent to the whole system; for any system with more than two unit cells, this is no longer the case.

10. García, José H., Lucian Covaci, and Tatiana G. Rappoport. 2015. “Real-Space Calculation of the Conductivity Tensor for Disordered Topological Matter.” Phys. Rev. Lett. 114: 116602. https://doi.org/10.1103/PhysRevLett.114.116602.